Creating Linux Virtual Servers

Wensong Zhang, Shiyao Jin, Quanyuan Wu

National Laboratory for Parallel & Distributed Processing

Changsha, Hunan 410073, China

Email: wensong@iinchina.net

Joseph Mack

Lockheed Martin

National Environmental Supercomputer Center

Raleigh, NC, USA

Email: mack.joseph@epa.gov

http://proxy.iinchina.net/~wensong/ippfvs

0. Introduction

Linux Virtual Server project.

The virtual server = director + real_servers

multiple servers appear as one single fast server

Client/Server relationship preserved

- IPs of servers mapped to one IP.
- clients see only one IP address
- servers at different IP addresses believe they are contacted directly by the clients.

Installation/Control

Patch to kernel-2.0.36 (2.2.x in the works)

ippfvsadm (like ipfwadm) adds/removes servers/services from the virtual server. Used in

- command line
- bootup scripts
- failover scripts

ippfvs = "IP Port Forwarding & Virtual Server" (from the name of Steven Clarke's port forwarding code)

code based on

- Linux kernel 2.0 IP Masquerading
- 2.0.36 kernel (2.2.x under way)
- port forwarding - Steven Clarke

single port services (eg in /etc/services, inetd.conf)

tested

- httpd
- ftp (not passive)
- DNS
- smtp
- telnet
- netstat
- finger
- Proxy
- nntp (added on 26 May 1999, it works)

protocols

If the service listens on a single port, then LVS is ready for it.

additional code is required for services that send IP:port as data, use two connections, or use callbacks.

ftp requires 2 ports (20,21) - code already in the LVS.

Load Balancing

The load on the servers is balanced by the director using

- Round Robin (unweighted, weighted)
- Least Connection (unweighted, weighted)
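
The two scheduling policies can be illustrated with a small user-space sketch. This is not the kernel code: the `RealServer` class, the function names, and the tie-breaking rule are assumptions made for illustration, and weights are assumed to be at least 1.

```python
from dataclasses import dataclass
from itertools import cycle


@dataclass
class RealServer:
    address: str      # e.g. "172.16.0.2:80"
    weight: int = 1   # relative capacity (assumed >= 1)
    active: int = 0   # connections currently assigned


def weighted_round_robin(servers):
    """Cycle through the servers, each appearing `weight` times per cycle."""
    expanded = [s for s in servers for _ in range(s.weight)]
    return cycle(expanded)


def weighted_least_connection(servers):
    """Pick the server with the fewest active connections per unit weight."""
    return min(servers, key=lambda s: s.active / s.weight)


servers = [RealServer("172.16.0.2:80", weight=1),
           RealServer("172.16.0.3:8000", weight=2)]

# Round robin: six picks over weights 1 and 2.
rr = weighted_round_robin(servers)
rr_picks = [next(rr).address for _ in range(6)]

# Least connection: assign six connections that all stay open.
lc_picks = []
for _ in range(6):
    chosen = weighted_least_connection(servers)
    chosen.active += 1
    lc_picks.append(chosen.address)
```

With weights 1 and 2, both policies send twice as many connections to the second server. The unweighted variants are the same functions with every weight left at 1.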

Released under the GPL, May 98: http://proxy.iinchina.net/~wensong/ippfvs

1. Credits

LVS

- loadable load-balancing module - Matthew Kellett matthewk@rebel.com
- kernel 2.2 port, prototype for LocalNode feature - Peter Kese peter.kese@ijs.si
- "Greased Turkey" document - Rob Thomas rob@rpi.net.au
- Virtual Server Logo - "Spike" spike@bayside.net
- chief author and developer - Wensong Zhang wensong@iinchina.net

High Availability

- mon - server failures
- heartbeat/fake - director failures
- mon - Jim Trocki, http://www.kernel.org/software/mon
- Fake (gratuitous ARP) http://linux.zipworld.com.au/fake/
- heartbeat - Alan Robertson, http://www.henge.com/~alanr/ha
- Coda - Peter Braam, http://www.coda.cs.cmu.edu/

2. LVS Server Farm

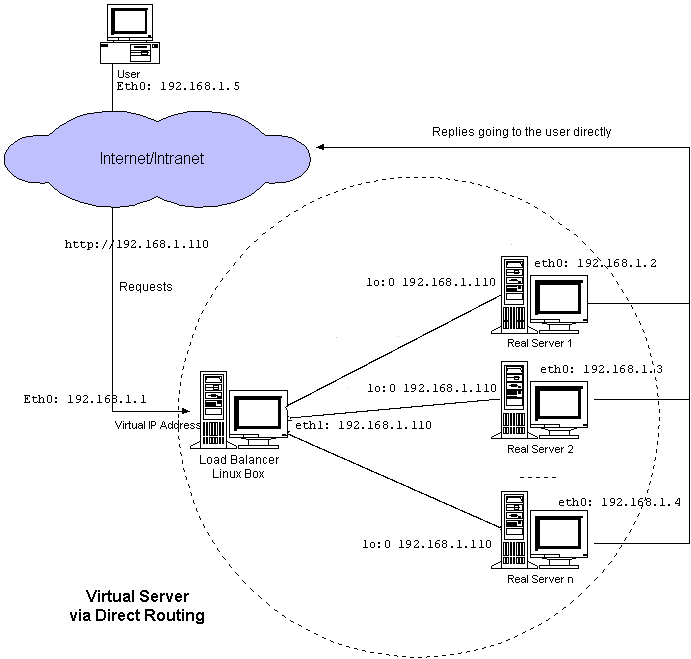

Figure 1: Architecture of a generic virtual server

The director inspects the incoming packet:

- new request: looks for next server, creates an entry in a table pairing the client and server.
- established connection: passes packet to appropriate server
- terminate/timeout connection: removes entry from table

The default table size is 2^12 connections (can be increased).
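
The three cases can be modelled in a few lines. This is a toy sketch, not the kernel data structure: the `Director` class, its `handle` method, and the `kind` argument are hypothetical names, and plain round robin stands in for the scheduler.

```python
from itertools import cycle


class Director:
    """Toy model of the director's connection table."""

    def __init__(self, servers):
        self._next_server = cycle(servers)  # plain round robin for brevity
        self.table = {}                     # (client_ip, client_port) -> server

    def handle(self, client, kind="data"):
        """Return the real server that this packet belongs to."""
        if kind == "close":
            # terminated or timed-out connection: drop the table entry
            return self.table.pop(client, None)
        if client not in self.table:
            # new request: choose the next server and record the pairing
            self.table[client] = next(self._next_server)
        # established connection: reuse the recorded server
        return self.table[client]


d = Director(["172.16.0.2", "172.16.0.3"])
first = d.handle(("202.100.1.2", 3456))    # new request
again = d.handle(("202.100.1.2", 3456))    # same connection, same server
other = d.handle(("202.100.1.9", 4000))    # second client, next server
closed = d.handle(("202.100.1.2", 3456), kind="close")
```

Keying the table on the client address and port is what keeps every packet of one connection on the same real server.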

Gotchas (for setting up, testing)

- Minimum 3 machines: client, director, server(s)
- cannot access services from director, need client
- access from server to service is direct

3. Related Works

Existing request dispatching techniques can be classified into the following categories:

- Berkeley's Magic Router, Cisco's LocalDirector (tcpip NAT, for VS-NAT)
- IBM's TCP router
- ONE-IP (all servers have same IP, no rewriting of reply packets, kernel mods to handle IP collisions)
- Parallel SP-2 (servers put router IP as source address of packets, no rewriting of reply packets, server kernel mods)
- IBM's NetDispatcher (for VS-Direct Routing)
- EDDIE, pWEB, Reverse-proxy, SWEB (requires two tcpip connections)

4. Director Code

kernel compile options: Director communicates with real servers by one of

- VS-NAT (Network Address Translation) based on ip-masquerade code
- VS-TUN - via IP Tunnelling
- VS-DR - via Direct Routing

4.1. VS-NAT - Virtual Server via NAT

NAT is a popular technique for allowing many machines to access another network using only one IP on that network. Examples: multiple computers at home linked to the internet by a ppp connection; a whole company on private IPs linked through a single connection to the internet.

VS-NAT: Diagnostic Features

- servers can have any OS
- director, real servers on same private net
- default route of real servers is director (172.16.0.1) - replies return by route they came
- packets in both directions are rewritten by director

VS-NAT Example

ippfvsadm setup

ippfvsadm -A -t 202.103.106.5:80 -R 172.16.0.2:80

ippfvsadm -A -t 202.103.106.5:80 -R 172.16.0.3:8000 -w 2

ippfvsadm -A -t 202.103.106.5:21 -R 172.16.0.3:21

Rules written by ippfvsadm

| Protocol | Virtual IP Address | Port | Real IP Address | Port | Weight |
|---|---|---|---|---|---|
| TCP | 202.103.106.5 | 80 | 172.16.0.2 | 80 | 1 |
| TCP | 202.103.106.5 | 80 | 172.16.0.3 | 8000 | 2 |
| TCP | 202.103.106.5 | 21 | 172.16.0.3 | 21 | 1 |

Example: request to 202.103.106.5:80

Request is made to IP:port on outside of Director

load balancer chooses real server (here 172.16.0.3:8000), updates VS-NAT table, then

| packet | source | dest |
|---|---|---|
| incoming | 202.100.1.2:3456 (client) | 202.103.106.5:80 (director) |
| inbound rewriting | 202.100.1.2:3456 (client) | 172.16.0.3:8000 (server) |
| reply to load balancer | 172.16.0.3:8000 (server) | 202.100.1.2:3456 (client) |
| outbound rewriting | 202.103.106.5:80 (director) | 202.100.1.2:3456 (client) |
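
The four rows of the packet trace can be reproduced with a short sketch. It only models address rewriting; the `Packet` type, the `nat_table` dictionary, and the function names are hypothetical, and the real server is fixed rather than chosen by a scheduler.

```python
from typing import NamedTuple


class Packet(NamedTuple):
    src: tuple   # (ip, port)
    dst: tuple   # (ip, port)


VIRTUAL = ("202.103.106.5", 80)   # address the client connects to
REAL = ("172.16.0.3", 8000)       # server chosen by the load balancer

nat_table = {}                    # client -> (virtual, real)


def rewrite_inbound(pkt):
    """Client -> director: replace the virtual destination with the real one."""
    nat_table[pkt.src] = (pkt.dst, REAL)
    return pkt._replace(dst=REAL)


def rewrite_outbound(pkt):
    """Server -> client: restore the virtual address as the source."""
    virtual, _real = nat_table[pkt.dst]
    return pkt._replace(src=virtual)


incoming = Packet(src=("202.100.1.2", 3456), dst=VIRTUAL)
to_server = rewrite_inbound(incoming)
reply = Packet(src=to_server.dst, dst=to_server.src)
to_client = rewrite_outbound(reply)
```

The client only ever sees 202.103.106.5:80 as its peer, and the server only ever sees the client's address, which is why both directions must pass through the director.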

VS-NAT Advantages

- Servers - any OS
- No mods for servers
- servers on private network (no extra IPs needed as servers are added)

VS-NAT Disadvantages

- NAT rewriting takes about 60usec per packet, which limits the rate

throughput = tcp packet size (536 bytes)/rewriting time (pentium 60usec) = about 9Mbytes/sec = about 72Mbps, comparable to 100BaseT

25 servers will average about 360KBytes/sec each (9Mbytes/sec shared 25 ways)
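
The estimate is plain arithmetic and can be checked directly. The constant names below are hypothetical; the figures (536 bytes, 60usec, 25 servers) are the ones quoted above.

```python
PACKET_BYTES = 536        # TCP packet size used in the estimate
REWRITE_SECONDS = 60e-6   # per-packet rewriting time on a Pentium
SERVERS = 25

bytes_per_sec = PACKET_BYTES / REWRITE_SECONDS
mbytes_per_sec = bytes_per_sec / 1e6
mbits_per_sec = bytes_per_sec * 8 / 1e6
kbytes_per_server = bytes_per_sec / SERVERS / 1e3

print(f"{mbytes_per_sec:.1f} Mbytes/sec, {mbits_per_sec:.0f} Mbps, "
      f"{kbytes_per_server:.0f} KBytes/sec per server")
```

Unrounded, this gives about 8.9 Mbytes/sec, 71 Mbps, and 357 KBytes/sec per server; rounding the first figure up to 9 Mbytes/sec yields the 72 Mbps quoted in the estimate.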

4.2. VS-TUN - Virtual Server via IP Tunneling

Normal IP tunneling (IP encapsulation)

- IP datagram encapsulated within IP datagrams
- IP packets carried as data through an unrelated network
- At end of tunnel, IP packet is recovered and forwarded
- connection is symmetrical - return packet traverses original route in reverse direction.

Tunnelling used

- through firewalls
- IPv6 packets pass through IPv4
- laptop access home network from foreign network

VS-Tunnelling

- director encapsulates and forwards packets to real servers.
- servers process request
- servers reply directly to the requester by regular IP. The return packet does not go back through the director.

For ftp, http, scalability

- director is fast (no rewriting)
- requests are small (eg http - "GET /bigfile.mpeg", ftp - get bigfile.tar.gz)
- replies are larger than requests and often very large
- 100Mbps director can feed requests to servers each on their own 100Mbps network (100's of servers)

VS-TUN Diagnostic features

- all nodes (director, servers) have an extra IP, the VIP
- request goes to VirtualIP (VIP:port), not to IP on outside of Director
- director, VIP is eth0 device
- servers, VIP is tunl device
- tunl device must not reply to arps (Linux 2.0.x OK, 2.2.x not OK)
- client connects to virtual server IP (tunl doesn't reply to arp, the eth0 device on director connects)
- replies from eth0 device on real server go to IP of client, ie use normal routing
- servers must tunnel
- servers can be geographically remote, different networks

Figure 4: Architecture of a virtual server via IP tunneling

Routing Table

Director

link to tunnel

/sbin/ifconfig eth0:0 192.168.1.110 netmask 255.255.255.255 broadcast 192.168.1.255 up

route add -host 192.168.1.110 dev eth0:0

ippfvsadm setup (one line for each server:service)

ippfvsadm -A -t 192.168.1.110:80 -R 192.168.1.2

ippfvsadm -A -t 192.168.1.110:80 -R 192.168.1.3

ippfvsadm -A -t 192.168.1.110:80 -R 192.168.1.4

Server(s)

ifconfig tunl0 192.168.1.110 netmask 255.255.255.255 broadcast 192.168.1.255

route add -host 192.168.1.110 dev tunl0

| packet | source | dest | data |
|---|---|---|---|
| request from client | 192.168.1.5:3456 (client) | 192.168.1.110:80 (VIP) | GET /index.html |
| ippfvsadm table is src 192.168.1.110, dest 192.168.1.2; director looks up routing table, makes 192.168.1.1 src, encapsulates | 192.168.1.1 (director) | 192.168.1.2 (server) | source 192.168.1.5:3456 (client), dest 192.168.1.110:80 (VIP), GET /index.html |
| packet of type IPIP, server 192.168.1.2 decapsulates, forwards to 192.168.1.110 | 192.168.1.5:3456 (client) | 192.168.1.110:80 (VIP) | GET /index.html |
| reply from 192.168.1.110 (routed via 192.168.1.2) | 192.168.1.110:80 (VIP) | 192.168.1.5:3456 (client) | ‹html›...‹/html› |
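
The encapsulation steps in the table can be sketched as nested packets. This is a model of the idea only, not of the kernel's IPIP code: the `IPPacket` class and the three functions are hypothetical, and ports are left out for brevity.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class IPPacket:
    src: str
    dst: str
    payload: Union[str, "IPPacket"]   # application data or an inner packet


DIRECTOR_IP = "192.168.1.1"
VIP = "192.168.1.110"


def encapsulate(inner, server_ip):
    """Director: wrap the unmodified client packet in an outer IP packet."""
    return IPPacket(src=DIRECTOR_IP, dst=server_ip, payload=inner)


def decapsulate(outer):
    """Real server: recover the original packet addressed to the VIP."""
    assert isinstance(outer.payload, IPPacket), "not an IPIP packet"
    return outer.payload


def reply(request, data):
    """Real server: answer from the VIP straight to the client."""
    return IPPacket(src=request.dst, dst=request.src, payload=data)


request = IPPacket("192.168.1.5", VIP, "GET /index.html")
tunnelled = encapsulate(request, "192.168.1.2")
recovered = decapsulate(tunnelled)
response = reply(recovered, "<html>...</html>")
```

The inner packet is never modified, so the server sees the client's address and can reply to it directly without going back through the director.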

VS-TUN Advantages

- servers can be geographically remote or on another network
- higher throughput than NAT (no rewriting of packets, each server has own route to client)
- director only schedules. For http, requests are small (GET /index.html), can direct 100's of servers.
- total server throughput of Gbps, director only 100Mbps

VS-TUN Disadvantages

- Server must tunnel, and not arp

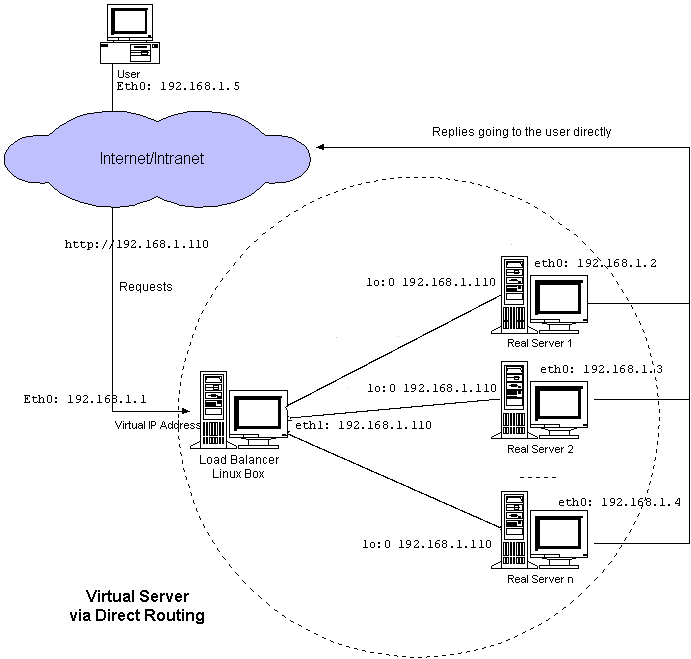

4.3. VS-DR - Virtual Server via Direct Routing

Based on IBM's NetDispatcher

Setup uses same IPs as VS-TUN example on a local network, with lo:0 device replacing tunl device

lo:0 doesn't reply to arp (except Linux-2.2.x).

Director has eth0:x 192.168.1.110, servers lo:0 192.168.1.110

When sending a packet to a server, the director just changes the MAC address of the packet
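
A sketch of the forwarding step, assuming a simplified frame with only the fields that matter here. The `Frame` type, the `forward_direct` function, and the MAC addresses are hypothetical.

```python
from typing import NamedTuple


class Frame(NamedTuple):
    dst_mac: str
    src_ip: str
    dst_ip: str
    data: str


# Hypothetical MAC addresses of the real servers on the local network.
SERVER_MACS = {
    "192.168.1.2": "00:00:00:00:00:02",
    "192.168.1.3": "00:00:00:00:00:03",
}


def forward_direct(frame, server_ip):
    """Re-address the frame at the link layer; the IP packet is untouched."""
    return frame._replace(dst_mac=SERVER_MACS[server_ip])


arriving = Frame("00:00:00:00:00:01", "192.168.1.5", "192.168.1.110",
                 "GET /index.html")
forwarded = forward_direct(arriving, "192.168.1.2")
```

Because the destination IP is still the VIP, the server accepts the packet on its lo:0 device, and because only a MAC address is used to reach it, the server has to be on the same network as the director.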

Differences:

- director, servers must be in same network

VS-DR Advantages over VS-TUN

- don't need tunneling servers

VS-DR Disadvantages

- lo device must not reply to arp
- director, servers same net

5. Comparison, VS-NAT, VS-TUN, VS-DR

| property/LVS type | VS-NAT | VS-TUN | VS-DR |
|---|---|---|---|
| OS | any | must tunnel (Linux) | any |
| server mods | none | tunl no arp (Linux-2.2.x not OK) | lo no arp (Linux-2.2.x not OK) |
| server network | private (remote or local) | on internet (remote or local) | local |
| return packet rate/scalability | low(10) | high(100's?) | high(100's?) |

6. Local Node

Director can serve too. Useful when only have a small number of servers.

On director, set up httpd to listen to 192.168.1.110 (as with the servers)

ippfvsadm -A -t 192.168.1.110:80 -R 127.0.0.1

7. High Availability

What if a server fails?

Server failure is handled by mon. mon scripts for server failure are on the LVS website.
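
The kind of check a failover script performs can be sketched as a TCP probe. This is not mon and not one of the scripts from the LVS website: the function names are hypothetical, and a successful TCP connect is assumed to be a good enough sign that the service is up.

```python
import socket


def is_alive(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def split_by_health(servers, timeout=1.0):
    """Partition (host, port) pairs into (alive, failed) lists."""
    alive, failed = [], []
    for host, port in servers:
        target = alive if is_alive(host, port, timeout) else failed
        target.append((host, port))
    return alive, failed
```

A failover script could call `split_by_health` periodically and use ippfvsadm to take the failed servers out of the virtual server and put recovered ones back.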

8. To Do

- load-informed scheduling
- geographic-based scheduling for VS-TUN
- "heartbeat"
- CODA
- cluster manager (admin)
- transaction and logging for restarting failed transfers.
- IPv6.

9. Conclusion

- virtual serving by NAT, Tunnelling or Direct Routing
- tunneling and Direct Routing are scalable
- fault tolerant
- round robin or least connection scheduling
- single connection tcp, udp services